Life at HTIC

HTIC is a healthcare research lab inside IIT Research Park. I joined Team SSR (Spine Surgical Robotics) as a final-year intern and worked there for almost a year and a half as a robotics engineer. The lab is run by Professor Mohanasankar Sivaprakasam. Most of my work involved writing motion planning algorithms so the robot could travel along collision-free paths.

The Journey

It all started with applying for a machine learning position at a robotics company. I know it sounds weird, but that’s how it started. I worked the whole time under the guidance of an MS scholar named Shyam A. The perk of working in this team was the flexibility to learn something new in the first couple of months. I have always had a soft corner for healthcare applications and products.

The Learning

During the first couple of months, I learned to work with ROS and the packages around it (MoveIt, FastIK, RViz). This was a full-time thing, and it was exciting to see the robot work in simulation and, in parallel, to implement the same thing on the real robot.

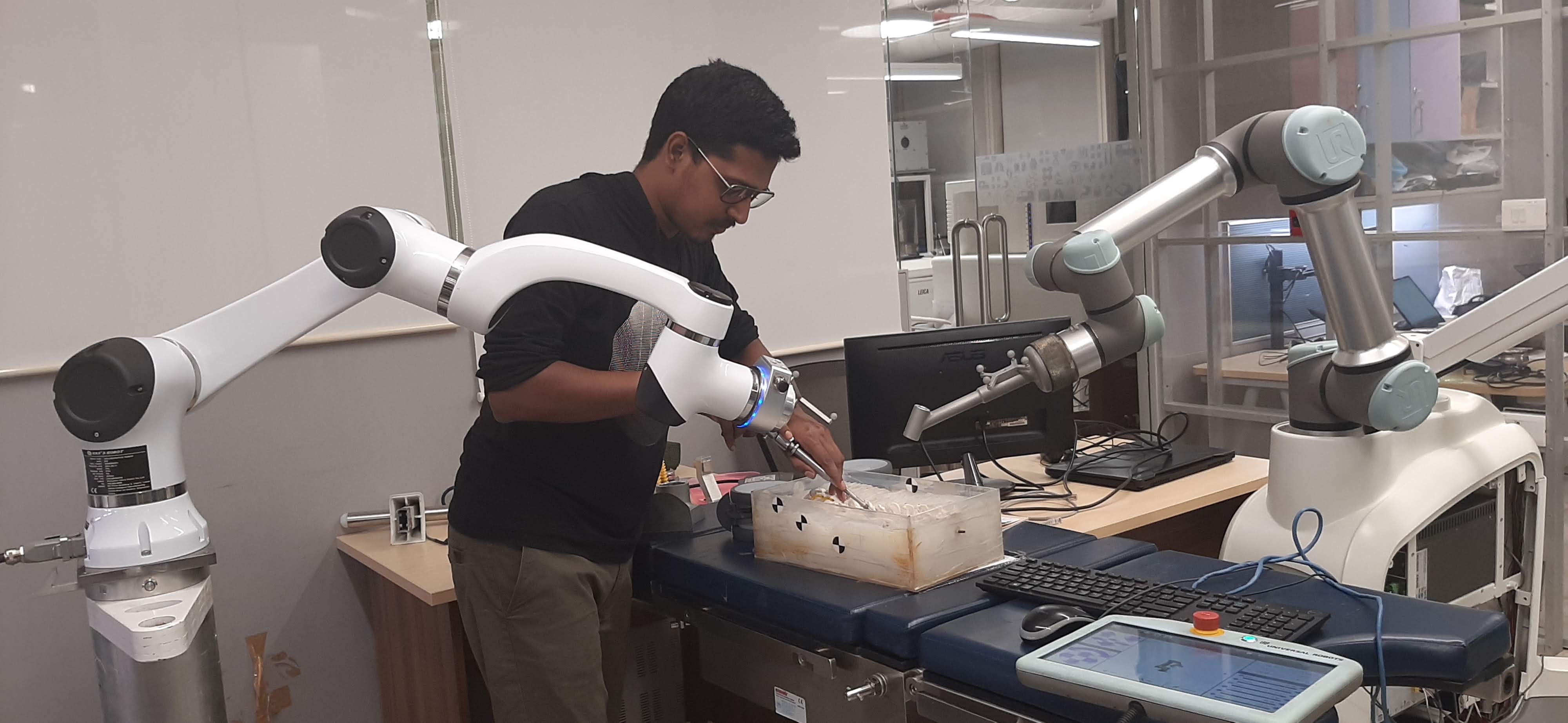

The perk of working from the office is that you get to move the real robot. I have personally worked with four different robots: Elfin, UR, KUKA, and Staubli, covering both cobots and industrial robots. The company has enough funds to bring in new robots. It doesn’t purchase all of them; it rents them, though it does own a few.

After working with robots for six months, I started digging into the application side. I was given the task of designing the robot's motion planning for the spine surgery application. We already had motion planning code, but we wanted it to be faster and easier to adapt to more robots.

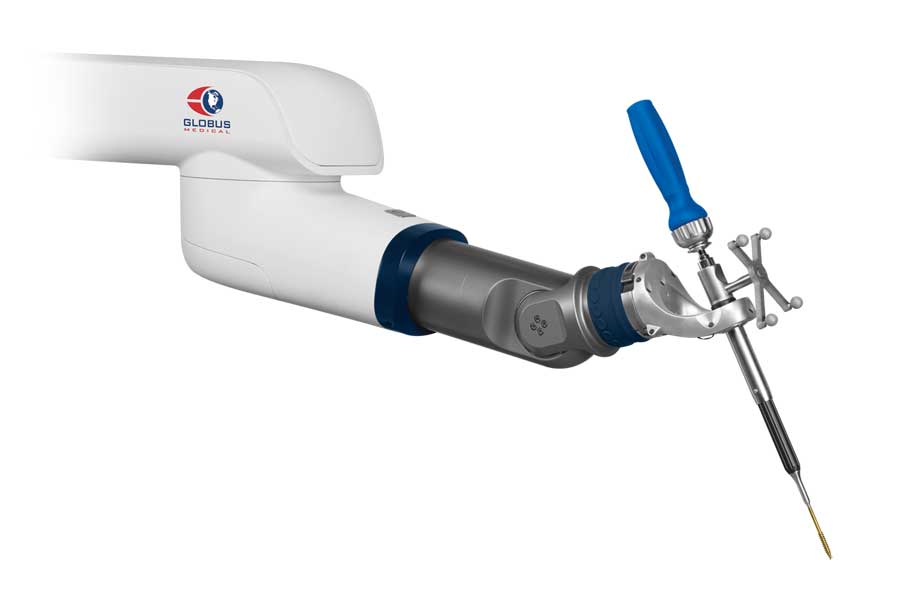

The application is simple. Imagine driving a needle into a wall with your bare hand: the needle can wobble and go in the wrong direction. Doctors do the same thing, but in the spine. Since the spine is connected to the brain, things go bad if the needle insertion is not accurate. Now imagine you have a holder, like an extra arm, that holds the screwdriver so all you need to do is rotate it. The one holding the driver here is the robot. That is all the robot does: it comes in and holds the screwdriver for the doctor.

Take a close look at the picture. It seems simple, right? It is not. One major enemy is collision during planning. We do not have a lot of cameras around, so the robot has to plan its trajectory using IR markers. The collisions to avoid include:

- Robot - Needles on the body

- Robot - Robot

- Robot - Doctor

- Robot - Patient

We need to track all of them while doing motion planning, so we built software that replicates the real scene in real time inside RViz and used collision libraries to check it. With the collisions checked, the planner produced proper collision-free trajectories. I helped my peer Aswath Govid write the motion planning software.
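To give a feel for what "checking a trajectory for collisions" means, here is a toy sketch in plain Python. It is not the code we wrote and it does not use RViz or any real collision library. It assumes the tool tip and every obstacle can be approximated by a sphere, and all names and numbers in it (`first_collision`, `tool_radius`, the obstacle positions) are made up for illustration.

```python
import math

def interpolate(a, b, steps):
    """Yield evenly spaced points from a to b, both endpoints included."""
    for k in range(steps + 1):
        t = k / steps
        yield tuple(x + t * (y - x) for x, y in zip(a, b))

def first_collision(waypoints, obstacles, tool_radius=0.05, steps=20):
    """Return (segment_index, obstacle_name) for the first hit, or None.

    Assumption: the tool and each obstacle are spheres, so two objects
    collide when their centres are closer than the sum of their radii.
    """
    for i in range(len(waypoints) - 1):
        for p in interpolate(waypoints[i], waypoints[i + 1], steps):
            for name, center, radius in obstacles:
                if math.dist(p, center) < tool_radius + radius:
                    return i, name
    return None

# Hypothetical scene: (name, centre in metres, radius in metres).
obstacles = [
    ("needle", (0.5, 0.0, 0.3), 0.02),
    ("patient", (0.5, 0.0, 0.0), 0.15),
]

straight = [(0.0, 0.0, 0.3), (1.0, 0.0, 0.3)]                  # passes through the needle
detour = [(0.0, 0.0, 0.3), (0.5, 0.4, 0.3), (1.0, 0.0, 0.3)]   # goes around it

print(first_collision(straight, obstacles))
print(first_collision(detour, obstacles))
```

A real planner checks the full robot geometry against meshes of the scene rather than a single sphere, but the idea is the same: sample the path, test every sample against every tracked object, and reject or re-plan any path that reports a hit.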

Even though I was working with really good robots and people, somewhere deep down I wanted to work with images and neural nets. Whenever someone talked about them, the desire grew stronger. Right at that moment, I got an opportunity to work with Detect Tech as a CV-Deep Learning Engineer-I.
